Nowadays, Google Shopping is one of the most popular digital marketing methods for e-commerce companies. However, it’s tough for them to keep their product data feed up to date manually.

Although it might be more convenient to use a solution from the site’s e-commerce platform or 3rd party, it is usually not free and/or may not be customisable.

In this post, we are going to show you how to create a product data feed by using your own free solution scraping data from your website with Google Apps Script (GAS).

Table Of Contents

- Create a feed in Google Merchant Center

- Modify The Google Sheets

- Scraping Data From Your Website

- Set Up Triggers to Run the Script on a Regular Basis

Create a Feed on Google Merchant Center

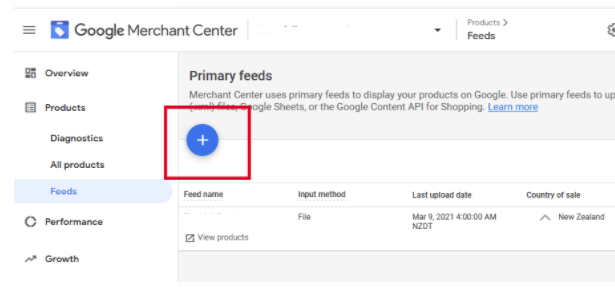

First of all, you need to create a feed on Google Merchant Center. After signing in to Merchant Center, click “Products” > “Feeds” on the left side menu. And then, click the “+” button in the “Primary feeds” form:

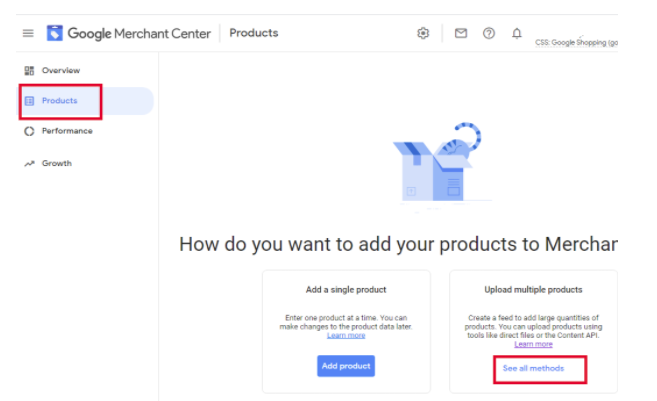

If you create a feed for the first time, click “Products” on the left side menu and click “See all methods” on the main window:

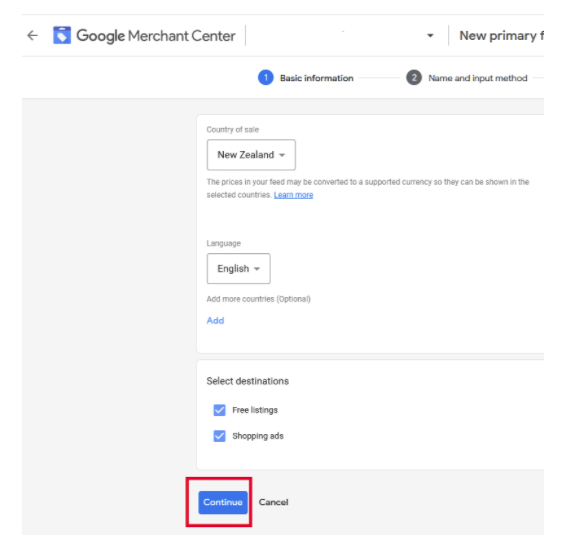

Select the country of sale and language and click “Continue”:

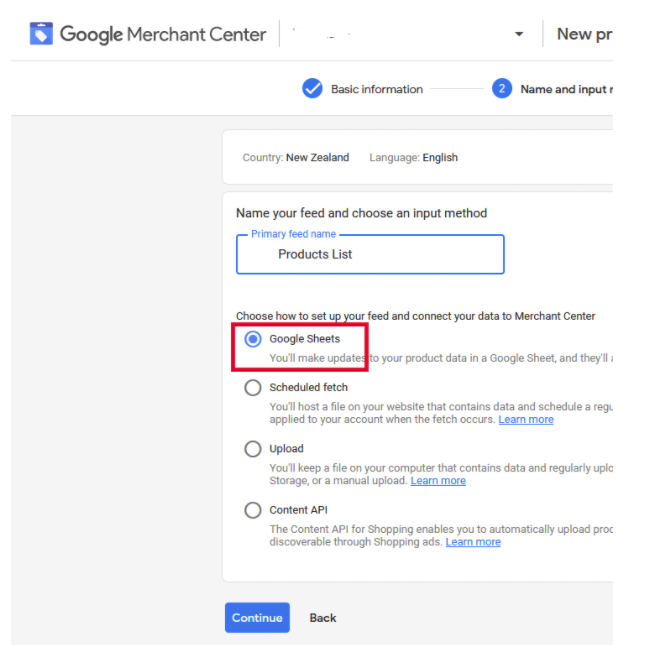

On the next page, you enter a name for your feed and select “Google Sheets”:

Next, select “Generate a new Google spreadsheet from a template”. You don’t have to change the upload schedule yet:

You should now see a new feed in Google Merchant Center.

Modify The Google Sheets

After having created the Google Sheets, you will need to make the following changes:

First of all, add links to the product pages in column D:

Next, create a new sheet named “log” and write “Date” in A1, “Latest Row” in B1 and “1” in B2:

Scraping Data from Your Website

Finally, let’s get to the main topic. All you have to retrieve is the data below:

- Id: product sku

- Title: product name

- Description: product description

- Link: product page URL

- Condition: new, used, refurbished

- Price : price

- Availability: in stock , out of stock, preorder

- Image_link: product image’s URL

- Gtin: Global Trade Item Number (OPTION)

- Mpn: myeloproliferative neoplasms (OPTION)

- Brand: brand name

- Google_product_category: category (OPTION)

Specify the path of the text

You need to specify the path of the text you extract from the page. If you use Google Chrome, you can find the path by following these steps:

- Right click on the text > Inspection

- Right click on the ‘Element’ panel > ‘Copy’ > ‘Copy JS path’

- Paste the data on text editor, the code is like ‘document.querySelector(“.maincontent > div > div.page-title-wrapper.product > h1 > span”)’

- Extract a path (e.g .maincontent > div > div.page-title-wrapper.product > h1 > span) from the text editor.

Implement Scripts

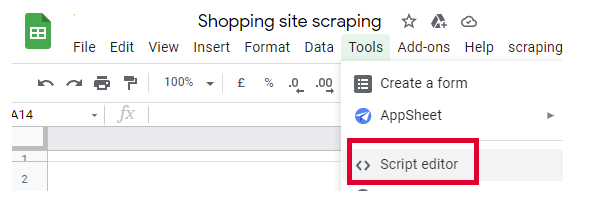

You can implement scripts with ‘Script Editor’. In Google Sheets, select “Tools” tab > “Script editor”:

Copy the following source code and paste it in the right-side panel:

Source code

const FILEKEY = 'xxxxxxxxxxxxxx';

const PARAMS = [

{'key':'id', 'path':'.id'},

{'key':'title', 'path':'h1.title'},

{'key':'description', 'path':'.productDescription'},

{'key':'link', 'path':'h1.title'},

{'key':'condition', 'path':'.productCondition'},

{'key':'price', 'path':'.priceValue'},

{'key':'availability', 'path':'.productStock'},

{'key':'image', 'path':'img.src'},

{'key':'gtin','path':'test'},

{'key':'mpn','path':'test'},

{'key':'brand','path':'.productBrand'}

];

const MAXROWS = 1000;

const BOOK = SpreadsheetApp.openById(FILEKEY);

const SHEETNAME ='Sheet1';

const LOGSHEET = 'log';

const DEFAULT_STARTROW = 2;

function main (){

var sheetData = book.getSheetByName(SHEETNAME);

var startRow = getLatestRow();

startRow = (startRow >= sheetData.getLastRow()) ? DEFAULT_STARTROW : startRow;

var urls = getURLs(sheetData);

var results =[];

for(var i = 0; i < urls.length; i++){

var result = scrape(urls[i]);

results.push(result);

}

writeResult(sheetData,results, startRow, 1);

}

function getLatestRow(){

var sheet =book.getSheetByName(LOGSHEET);

var row = sheet.getRange(sheet.getDataRange().getLastRow(),2,1,1).getValue();

return row;

}

function setLatestRow(row){

var sheet =book.getSheetByName(LOGSHEET);

var result = [[formatDateTimeAsString(new Date()),row]];

sheet.getRange(sheet.getDataRange().getLastRow()+1,1,result.length, result[0].length).setValues(result);

}

function getURLs(sheetData){

var ret = [];

var urls = sheetData.getRange(2,4,sheetData.getDataRange().getLastRow(),1).getValues();

for(var i = 0; i < urls.length; i++){

if(i < MAXROWS){

ret.push(urls[i][0]);

}else{

break;

}

}

return ret;

}

function scrape(url){

const postheader = {

"useragent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.116 Safari/537.36",

"accept":"gzip, */*",

"timeout":"20000"

}

var result = [];

if(!(url.length> 0 && url.indexOf('http') >= 0)){

result = ['','','',url,'','','',''];

return result;

}

try{

var content = UrlFetchApp.fetch(url).getContentText("UTF-8");

var $ = Cheerio.load(content);

}catch(e){

result = ['','','',url,'','','',''];

return result;

}

PARAMS.forEach(function(param){

var attribute = '';

try{

key = param['key'];

path = param['path'];

var element = $(path);

if(key == 'link'){

attribute = url;

}else if(key == 'image'){

attribute = element.attr('src');

}else if(key == 'price'){

// Price: $49.00 NZD

attribute = element.text();

attribute = attribute.replace('Price:','').replace('$','').replace('NZD','').trim();

}else if(path == 'test'){

attribute = '';

}else{

attribute = element.text();

}

}catch(e){

Logger.log(e);

}

result.push(attribute);

});

return result;

}

function writeResult(sheetData,results, startRow, startColumn){

if(results.length > 0){

var outputRange = sheetData.getRange(startRow, startColumn, results.length, results[0].length);

outputRange.setValues(results);

setLatestRow(startRow+results.length);

}

}

function formatDateTimeAsString(d) {

var dateString = Utilities.formatDate(d, 'GMT+12:00', 'yyyy/MM/dd HH:mm:ss');

return dateString;

}

Constant Variables

const FILEKEY = 'xxxxxxxxxxxxxx'; “FILEKEY” is a part of the URL of the sheets. Retrieve the URL from after “/d/” as highlighted below:

const PARAMS = [

{'key':'id', 'path':'.id'},

{'key':'title', 'path':'h1.title'},

{'key':'description', 'path':'.productDescription'},

{'key':'link', 'path':'h1.title'},

{'key':'condition', 'path':'.productCondition'},

{'key':'price', 'path':'.priceValue'},

{'key':'availability', 'path':'.productStock'},

{'key':'image', 'path':'img.src'},

{'key':'gtin','path':'test'},

{'key':'mpn','path':'test'},

{'key':'brand','path':'.productBrand'}

];

“PARAM” array contains the path of texts you would like to retrieve from the product pages. Replace each value of ‘path’ to the path you get from the pages. For example,

Before: {‘key’:’title’, ‘path’:’h1.title”},

After: {‘key’:’title’, ‘path’:’.maincontent > div > div.page-title-wrapper.product > h1 > span’},

Function main

This function runs for the following tasks.

- Obtain a sheet object to write a result.

- Extract a series of URLs to retrieve.

- Retrieve data from the URLs and put them into the sheet and last row number into the LOG sheet.

getLatestRow / setLatestRow

You can keep and retrieve the latest scraping row number from the sheet.

getURLs

This function returns a list of URLs. When this function can’t finish before timeout, please reduce the constant variable “MAXROWS”.

scrape

This function retrieves data from the product pages and returns them.

const postheader = {

"useragent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.116 Safari/537.36",

"accept":"gzip, */*",

"timeout":"20000"

} As a header is required for some websites, you may need to create a header. You can change the value of “useragent”.

if(!(url.length> 0 && url.indexOf('http') >= 0)){

result = ['','','',url,'','','',''];

Logger.log("url =" + url.length +"\t" + url.indexOf('http') +"\t" + url);

return result;

}

try{

var content = UrlFetchApp.fetch(url).getContentText("UTF-8");

var $ = Cheerio.load(content);

}catch(e){

result = ['','','',url,'','','',''];

return result;

}In this script, we scrape the page using cheerio-gasify . And it puts the the of the URL into $ variable.

If the URL is wrong or it can’t retrieve data for some reason, we insert an empty row.

PARAMS.forEach(function(param){

var attribute = '';

try{

key = param['key'];

path = param['path'];

var element = $(path);

if(key == 'link'){

attribute = url;

}else if(key == 'image'){

attribute = element.attr('src');

}else if(key == 'price'){

// Price: $49.00 NZD

attribute = element.text();

attribute = attribute.replace('Price:','').replace('$','').replace('NZD','').trim();

}else if(path == 'test'){

attribute = '';

}else{

attribute = element.text();

}

}catch(e){

Logger.log(e);

}

result.push(attribute);

});This function retrieves the data with the path and $ object. And it transforms the result into any format you like and puts them into an array.

You can change the code around transforming the data as you like.

(e.g attribute = attribute.replace('Price:','').replace('$','').replace('NZD','').trim();)Finally, it returns the two-dimensional array.

writeResult

Write the array data into the sheet.

Add Cheerio to libraries.

Before you run this script, you need to add “Cheerio” to the libraries.

- Click “Libraries” in the left panel.

- Enter script ID into the form. You can get the script ID from here.

Set Up Triggers to Run the Script on a Regular Basis

To run this script regularly, you need to set up triggers.

- Click “Triggers” in the left panel.

- Select the followings

- main

- Head

- Time-driven

- Hour timer

- Every hour

And we’re all done!

This script will run once every hour and write or update the “Sheet1” sheet. When something’s wrong with this, the error message will be written in the “log” sheet.